Building AI Agents

Our transition from heuristic to goal-based software is just beginning. While we have lessons from the content era (search, ads, feed) on applying predictive ML to consumer-facing products, GenAI opens up a whole new vector for applying Machine Learning (AI) tech in products.

Traditional software practices focus on deterministic requirements such as scale, latency, and correctness. Agent systems operate in the ML software paradigm: they interact with the world through a probabilistic, Maximum-Likelihood Estimation approach, and can keep refining and improving their logic.

AI Agent tech - software that has the agency to interact, reason, and make decisions - is nascent. We do not yet have the scar tissue of lessons from the field, and winning design patterns have yet to solidify. This post is an attempt to articulate our early thinking and provide a reference for those starting to explore Agentic AI.

The core capabilities of LLM technology can be summarized as a language interface, a reasoning engine, and a knowledge base. We will use these primitives to compose an Autonomous Software Worker.

Why build Autonomous Software Workers?

The promise of AI agents is to free people from the mundane so they can focus on more creative and abstract problem-solving. But that’s not all. The bigger opportunity is to be 1000x more effective: agents could carry out jobs with an agility and depth that a human workforce can’t attain.

For example, here are some workflows and processes that people would rather delegate to a software agent.

Engineering - Our job is to produce software. Yet a good chunk of a developer’s time goes into operational activities and KTLO (Keep The Lights On) work that few truly enjoy.

- On-calls - Who loves being available 24/7? Instead, an AI agent can be the first line of defense, answering questions, running deployments, watching metrics, taking mitigation steps, and summarizing an outage or related event.

- SRE - Creating and translating cloud deployments, monitoring health, and reacting to issues.

- TPM - AI agents can be intermediaries, collecting, interpreting, and presenting progress and risks. What’s more, they’re available 24/7 with real-time data, accelerating the project cycle.

Customer Support - Strives to provide a delightful customer experience and earn repeat business. An AI bot can eliminate wait times, spare customers from repeating context, use telemetry to diagnose a customer’s issue, and consult vast knowledge and procedures to remediate the issue instantly.

Data - The primary job is to derive insights from data that help business decision makers drive growth. Yet the data team often plays a supporting role: overwhelmed with support tickets, transforming and monitoring data pipelines, and creating reports. Software agents free up the team to focus on driving growth: data collection and experimentation, optimization, and deriving and testing causal insights.

Lead Generation - An AI sales agent can scour and qualify 1000x more candidates than a human workforce.

Properties of a production-grade AI Worker

Sticking with the theme of anthropomorphizing AI software, we want it to exhibit these key human-like characteristics.

- Productive: Reliably carry out multi-step, long-range workflows autonomously, but understand its limitations and ask for human-in-the-loop (HiTL) help when needed

- Goal-oriented: Know what matters over a long time horizon, and plan its work effectively to achieve a programmed objective

- Versatile: Work across the interfaces and apps that humans use (a range of modalities)

- Knowledgeable: It should come with a bachelor’s degree: know the standards of the domain and its job function.

- Learning: Get better with experience by learning the peculiarities of the organization and the tribal knowledge of its employees

- Supervisable: Limit its agency around business policies and practices; be auditable and reproducible.

- Good Citizen: Comply with data security and governance policies

- Self-Aware: AI’s ability to understand and articulate its confidence bounds, its own limitations of knowledge and reasoning, is a critical component in getting it to reliably interact in the world autonomously.
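
To make the Productive, Supervisable, and Self-Aware properties a little more concrete, here is a minimal sketch of confidence-gated escalation with an audit trail. Everything in it is hypothetical and for illustration only: the names `StepResult` and `SupervisedRunner`, the `0.8` threshold, and the assumption that each step can report a confidence score in [0, 1]. How to estimate that score well is the hard part and is not shown.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class StepResult:
    output: str
    confidence: float  # assumed to lie in [0, 1]; estimating it is out of scope here


@dataclass
class SupervisedRunner:
    threshold: float = 0.8  # hypothetical policy knob set by the business
    audit_log: List[Tuple[str, str, float]] = field(default_factory=list)

    def run(
        self,
        name: str,
        propose: Callable[[], StepResult],
        ask_human: Callable[[str, StepResult], str],
    ) -> str:
        """Accept a proposed step on its own, or hand it to a human when unsure."""
        result = propose()
        if result.confidence >= self.threshold:
            decision, output = "auto", result.output
        else:
            decision, output = "escalated", ask_human(name, result)
        # Every decision is recorded so the run can be audited later.
        self.audit_log.append((name, decision, result.confidence))
        return output
```

A single threshold is the simplest possible policy. In practice one would likely want per-action thresholds, so that a risky action escalates sooner than a read-only one.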

Our Blueprint

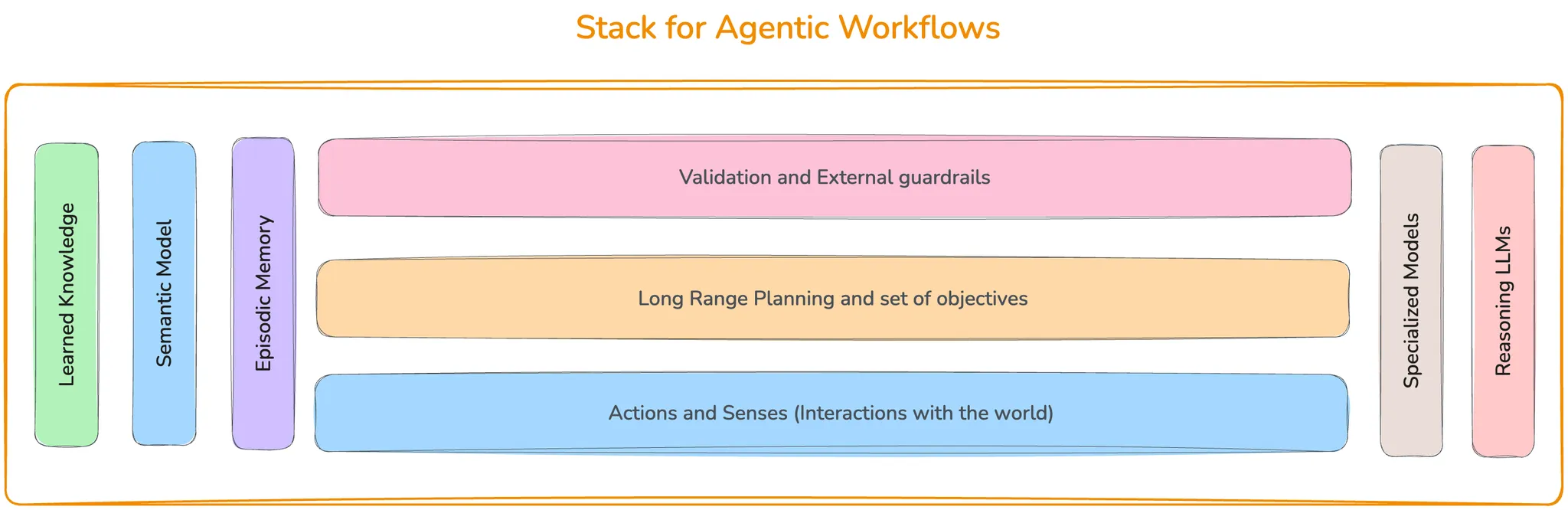

The overall framework is akin to ‘inference time compute’, but with explicit controls. We will dive deeper into each component in the next few posts.

Planner: A planner module is responsible for reasoning and planning actions to achieve a well-defined objective. The approaches we have tried range from fine-tuning the model to understand temporal and causal relationships, to combining symbolic formalism and a logic engine with the LLM to create a hybrid: LLM reasoning in a well-defined and constrained domain. One of the more complex challenges with planning is having the planner understand its own confidence bounds. Fully probabilistic approaches, such as Bayesian inference, retain the full output distribution. MLE approaches like autoregressive LLMs collapse their parameter estimates to a single point and lose those bounds. However, there is active research on making such models understand their own limitations.
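
The contrast between the two approaches can be written down in standard textbook form. The notation is ours, not taken from any specific system: $\theta$ for model parameters, $\mathcal{D}$ for training data, $x$ for the input, and $y$ for the output.

```latex
\begin{aligned}
\text{Bayesian:}\quad & p(y \mid x, \mathcal{D}) = \int p(y \mid x, \theta)\, p(\theta \mid \mathcal{D})\, d\theta \\
\text{MLE:}\quad & \hat{\theta} = \arg\max_{\theta}\, p(\mathcal{D} \mid \theta), \qquad p(y \mid x, \mathcal{D}) \approx p(y \mid x, \hat{\theta})
\end{aligned}
```

The Bayesian prediction averages over every plausible $\theta$, so uncertainty about the parameters carries through to the output. The MLE prediction plugs in a single $\hat{\theta}$, which is where that uncertainty is lost.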

Objective: Long-horizon objective specification is itself a tough problem, because we want providing tasks to be as easy and natural as possible. Objectives can be expressed as qualitative statements, specific KPIs, or mathematical equations.
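
As a rough illustration of those three forms, an objective could be modeled as a small tagged union. The type names (`QualitativeGoal`, `KpiGoal`, `FormulaGoal`) and their fields are hypothetical, chosen only for this sketch, and do not describe a settled schema.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Union


@dataclass(frozen=True)
class QualitativeGoal:
    statement: str  # free text, e.g. "keep customers informed during outages"


@dataclass(frozen=True)
class KpiGoal:
    metric: str
    target: float
    higher_is_better: bool = True

    def satisfied(self, observed: Dict[str, float]) -> bool:
        """Check an observed metric value against the target."""
        value = observed[self.metric]
        return value >= self.target if self.higher_is_better else value <= self.target


@dataclass(frozen=True)
class FormulaGoal:
    # A function of observed metrics that the agent should maximize.
    score: Callable[[Dict[str, float]], float]


# An objective is any one of the three forms.
Objective = Union[QualitativeGoal, KpiGoal, FormulaGoal]
```

Only the KPI and formula forms can be checked mechanically. A qualitative statement still needs a model or a human to judge whether it has been met, which is part of what makes specification hard.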

Memory: Our system needs a working memory to store intermediate results and thoughts, but also a repository of learned behavior from acting in the field. We typically use embeddings or adapter-based weights to provide external memory.
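
The embedding flavor of external memory can be sketched in a few lines. The `embed` function below is a toy stand-in, a bag-of-words count vector, and is an assumption made only to keep the example self-contained. A real system would call a learned embedding model and most likely a vector index. The class name `EpisodicMemory` is likewise hypothetical.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for a learned embedding: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class EpisodicMemory:
    """Stores notes from past runs and recalls the most similar ones."""

    def __init__(self) -> None:
        self._items: List[Tuple[Counter, str]] = []

    def remember(self, note: str) -> None:
        self._items.append((embed(note), note))

    def recall(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [note for _, note in ranked[:k]]
```

For example, after remembering "restart the payments service after a failed deploy", a query such as "payments deploy failed" recalls that note ahead of unrelated ones.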

Semantic Model: In order to generalize, our agents need to understand the nuances of the domain, ranging from language and terminology, to relationships between artifacts, to specialized tribal knowledge. Again, fine-tuning or adapters work well, but the main challenge is to make it easy to provide semantic data in the first place.

Actions: Actions govern how agents interact with the world. We need design patterns and standards that handle both deterministic and non-deterministic action results, and that enforce idempotency, since an agent’s multi-shot approach means the same action may be attempted more than once.
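
One common way to get idempotency is to derive a key for each attempted action and replay the stored result when the same key comes back. The sketch below assumes the planner assigns a stable `step_id` to every step. That identifier, the `IdempotentExecutor` class, and the in-memory result store are all hypothetical simplifications. A production version would persist results and handle concurrent attempts.

```python
import hashlib
import json
from typing import Any, Callable, Dict


class IdempotentExecutor:
    """Runs each (step, action, params) combination at most once."""

    def __init__(self) -> None:
        self._results: Dict[str, Any] = {}  # in-memory only, for illustration

    @staticmethod
    def key_for(step_id: str, action: str, params: Dict[str, Any]) -> str:
        # sort_keys makes the key independent of parameter ordering.
        payload = json.dumps(
            {"step": step_id, "action": action, "params": params}, sort_keys=True
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def execute(
        self,
        step_id: str,
        action: str,
        params: Dict[str, Any],
        handler: Callable[..., Any],
    ) -> Any:
        key = self.key_for(step_id, action, params)
        if key not in self._results:
            # First attempt: perform the side effect and remember the outcome.
            self._results[key] = handler(**params)
        # Retries of the same step return the stored result without re-running.
        return self._results[key]
```

Including `step_id` in the key is a deliberate choice. Without it, two legitimately separate requests with identical parameters (say, two refunds of the same amount) would be collapsed into one.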

Validators: Injecting a validation step into planning improves reliability by providing a third-party opinion on the solution. We also want to double-check that the solution meets our policies and rules. Ideally, there is an asymmetry between solution and validation: validation should be cheap to compute even when the planning action is long and expensive.
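
The asymmetry can be illustrated with a simple propose-then-validate loop. Producing candidate plans may be expensive (an LLM call, a search), but checking one against policy rules is a cheap pass over its steps. The plan representation (a list of step names), the two example rules, and the helper names are assumptions made for this sketch.

```python
from typing import Callable, Iterable, List, Optional

Plan = List[str]
# A rule returns a violation message, or None if the plan passes.
Rule = Callable[[Plan], Optional[str]]


def no_forbidden_steps(forbidden: Iterable[str]) -> Rule:
    banned = set(forbidden)

    def rule(plan: Plan) -> Optional[str]:
        bad = [step for step in plan if step in banned]
        return f"forbidden steps: {bad}" if bad else None

    return rule


def requires_before(first: str, then: str) -> Rule:
    def rule(plan: Plan) -> Optional[str]:
        if then in plan and (first not in plan or plan.index(first) > plan.index(then)):
            return f"'{first}' must come before '{then}'"
        return None

    return rule


def validate(plan: Plan, rules: List[Rule]) -> List[str]:
    """Return every violation found; an empty list means the plan passes."""
    return [msg for rule in rules if (msg := rule(plan)) is not None]


def first_valid(candidates: Iterable[Plan], rules: List[Rule]) -> Optional[Plan]:
    """Return the first candidate that passes every rule, or None."""
    for plan in candidates:
        if not validate(plan, rules):
            return plan
    return None
```

Because `candidates` can be a lazy generator, the expensive planner only has to produce as many plans as it takes to find one that validates.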

Specialized Models: Many of the components require specialized models, either to imbue them with use-case-specific knowledge and reasoning capabilities, or to compress them to manage latency and cost.

Creating AI Agent Workers that mimic long-range human workflows is quite challenging. The core challenge is acting reliably, predictably, and in compliance. We are just scratching the surface, but it is already exciting to see that common design patterns are starting to emerge.