PyTorch Design Philosophy

This is an ongoing note around Pytorch and its quirks.

PyTorch is a library for Neural Net model building using Python. It provides:

a) an array-based programming model, known as Tensors

b) an automatic differentiation engine, utilizing the chain-rule of differentiation

c) hardware acceleration through cuDNN, parallelization, and graph optimizers

d) a large library for common neural net model building constructs

e) Pythonic programming environment

It has been popularized and matured to a state where it is heavily used in research and production, while handling GPU interfacing and parallelization for large-scale DNNs on multiple GPUs clusters.

Design Philosophy

The overarching design philosophy is to preserve developer efficiency while being suitable for production environments: this requires portability and efficiency.

Python Ecosystem

Using imperative and familiar Python language. This has an additional benefit of developer having access to Python's numerical computation libraries like NumPy, SciPi, and Pandas, debuggability constructs, and developer tooling.

Its design principles also ensures interoperability with Python ecosystem, for example, automatic efficient translation between Numpy and Pytorch tensors.

Eager execution

Pytorch programs execute in eager or graph mode. Eager mode is quite intuitive and follows immediate execution of statements. All features of python work intuitively, but with the drawback that execution graph may not be optimal.

Graph mode, on the other hand, defers the evaluation of statement until a compilation directive. Compilation function has access to the full computation graph and can optimize the execution better, similar to the role of code compilers.

Eager-mode is great for research, experimentation, and debugging, while graph-mode is great for efficient execution.

Performance

Despite its Pythonic experience, most core libraries are written and optimized in C++ for efficient execution. libtorch implements datastructure, CPU and GPU optimized libraries and parallelization routines. It also bypasses Python's global interpreter lock to support multithreaded execution. Moreover they have build additional optimiztions to improve performance such as splitting control and dataflow, caching tensor allocators, custom multiprocessing library, reference counting, and more.

Drawbacks

One of the drawbacks of using a dynamic language like Python is that we don't get static typing. Tensors carry dimensions which must align when operations are composed. A typing system that understands dimensionality would prevent runtime mis-matches while making the code succinct.

PyTorch Library Namespaces

Here are core libraries that are extremely useful when building PyTorch models:

- torch - tensors, functions, modules

- torch.nn - layers, optimizers, loss functions

- torch.optim - optimization algorithms: sgd, adam, adagrad

- torch.utils - data utility

- torch.distributed - multi-gpu and multi node distributed training

- torch.autograd - automatic differentiation

- torchvision - vision utilities: pretrained models, datasets, image transforms

- torchtext - nlp datasets, tokenizers, vocabs

Graph Execution

import torch

class AddModel(torch.nn.Module):

def __init__(self):

super().__init__()

self.add = torch.nn.functional.add

def forward(self, x, y):

return self.add(x, y)

# Create a model

model = AddModel()

# Set some input values

x = torch.tensor(1.0)

y = torch.tensor(2.0)

# Compute the output

output = model(x, y)

# Print the output

print(output)

# Create a model

model = AddModel()

# Export the model to graph mode

graph_model = torch.jit.trace(model)

# Set some input values

x = torch.tensor(1.0)

y = torch.tensor(2.0)

# Compute the output

output = graph_model(x, y)

# Print the output

print(output)While they both output 3.0 the difference is in the way models are executed.

TorchScript

TorchScript solves the problems around portability and performance that arise from limitations due to Python-only nature of earlier Pytorch implementation.

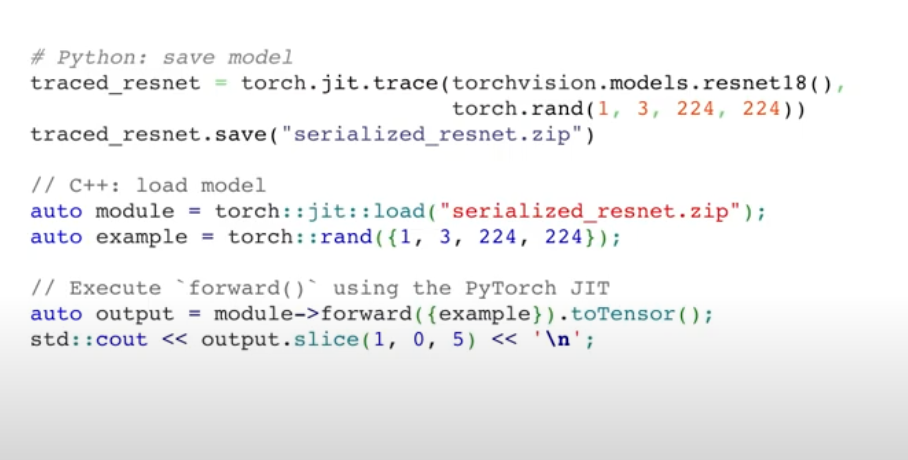

Portability involves exporting the execution graph to be run outside of python interpreter, for example, c++ server, mobile or embedded device. This allows models to be written in Python but optimized and deployed elsewhere.

Performance involves improving latency and throughput through additional optimization opportunities that what Python supports.

This problem is unfolded into:

- Capture the structure of PyTorch program

- Use the structure to further optimize the graph

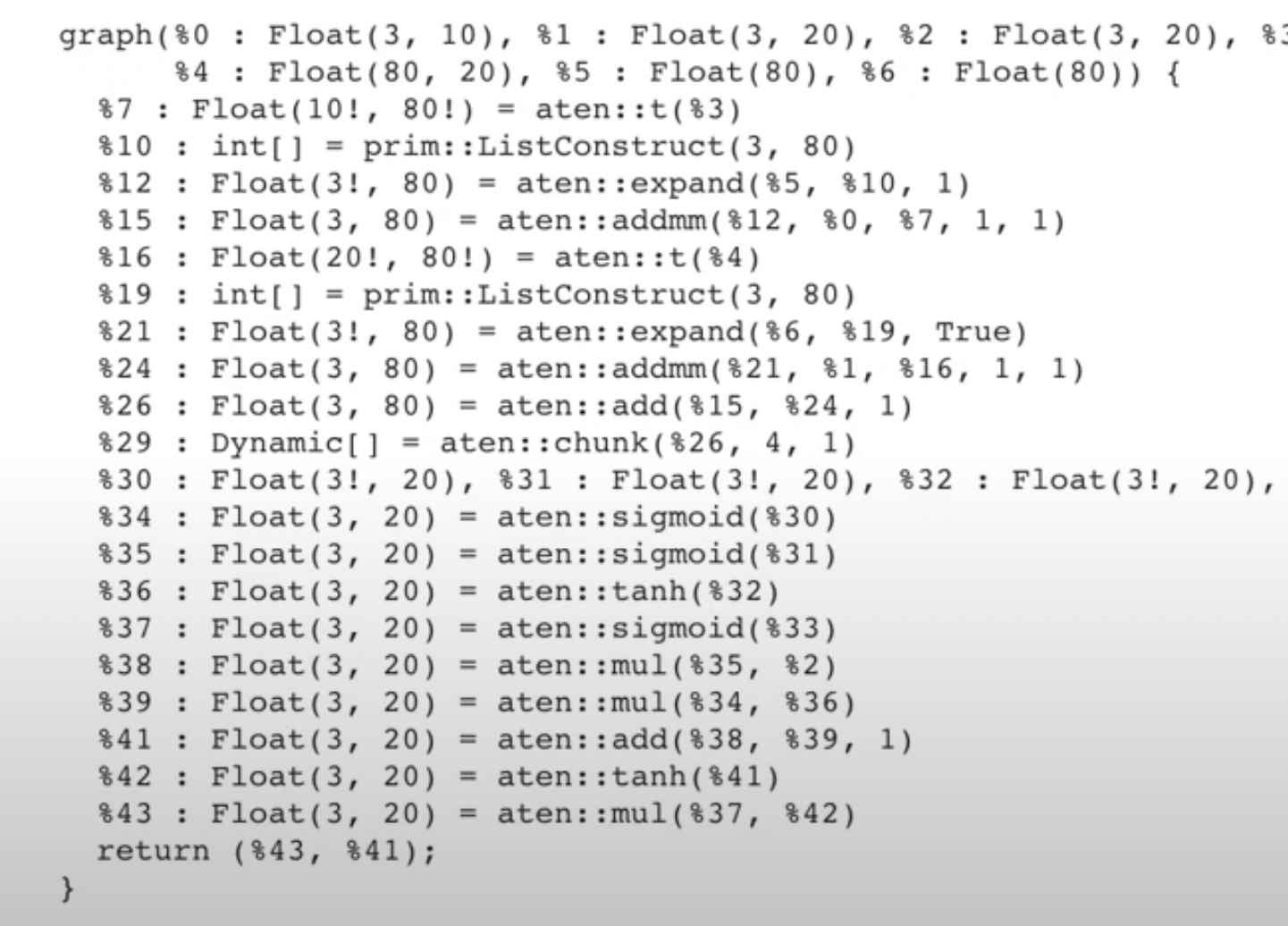

Script Mode

Eager mode runs in PyTorch runtime, whereas script mode runs in custom TorchScript runtime. Eager mode is ideal for prototyping and debugging, while Script mode is optimized for production deployment.

It provides a couple of compilation apis:

- torch.jit.trace() --> tracers tensor oeprations, but does not capture control flow

- torch.jit.script() --> lex and parse python code and converts to torch script. This preserves control flow, data structure and can be seen as an Intermediate Representation (IR) language

It allows for static typing, structured control flow, and functional semantics (easy to reason about transformations and optimization)

This also allows portability as scripted models can be saved and loaded in a different runtime, for example:

The graph-mode and script's runtime allows for various graph optimization, including the most common:

- algebraic rewriting

- out of order execution

- operator fusion

- platform-dependent code generation