What are AGI and Superintelligence?

I’ve come across technologists in two different camps:

Camp A talks about AGI as a milestone: “AGI will be achieved”, or “No way will we achieve AGI with current algorithms”.

Camp B considers AGI and Superintelligence to be ill-defined terms, and therefore purely a marketing play.

I want to share a simple way of thinking about these terms: they’re not an end goal, but rather a direction for building “intelligent machines”. But first, let’s clarify what we mean by intelligence.

What is Intelligence?

Intelligence, in a problem-solving sense, is a measure of the efficiency with which one can solve new problems. François Chollet’s formulation in “On the Measure of Intelligence” is, roughly:

“The skill-acquisition efficiency across tasks, with respect to priors, experience, and generalization difficulty.”

There are various formal metrics for the information and energy efficiency with which new, unseen problems can be solved, but you get the general idea. The same idea is the rubric behind the ARC-AGI benchmark, where each problem requires learning a new set of skills before it can be solved.

One qualifier to add here is that the space of tasks to consider is “all tasks valuable to humans, economically or otherwise”. Whether it is making a sandwich, catching a ball, or telling a joke, they’re all tasks people find useful.

What are AGI and Superintelligence?

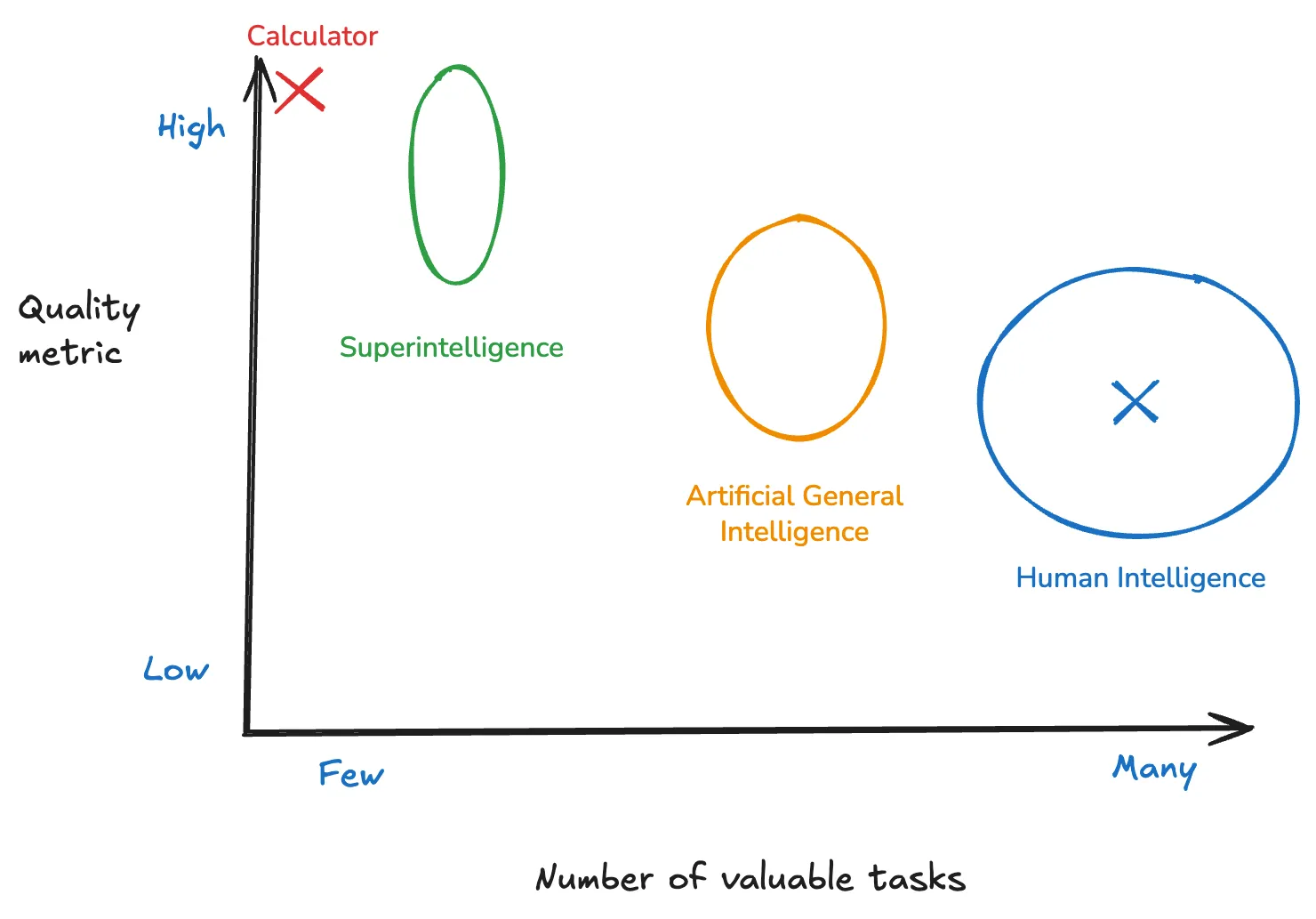

A calculator is exceptional at one task: number-crunching. However, it lacks any generalization. It is skilled, but not intelligent.

In my view, AGI and Superintelligence provide directionality only. AGI focuses on generalizing intelligence to many valuable tasks, while being at least on par with human skill on each of them. Superintelligence trades off generalization for stronger intelligence on fewer valuable tasks. Either way, advancing ‘intelligence’ means generalizing to carry out more and more tasks, some of which were never seen before.
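To make the two directions concrete, here is a toy sketch in Python. Everything in it is an assumption made up for illustration: the skill scores, the normalization of human level to 1.0, and the `breadth` and `depth` helpers. It also only scores a snapshot of skill, not the learning efficiency that the definition of intelligence above is about, so treat it as a way to picture the axes rather than a real metric.

```python
from statistics import mean

# Hypothetical skill scores per task, with human level normalized to 1.0.
# All numbers are invented for illustration.
HUMAN_LEVEL = 1.0


def breadth(scores: dict[str, float]) -> float:
    """Fraction of tasks performed at or above human level (the AGI direction)."""
    return sum(s >= HUMAN_LEVEL for s in scores.values()) / len(scores)


def depth(scores: dict[str, float]) -> float:
    """Mean skill on the tasks at or above human level (the Superintelligence direction)."""
    strong = [s for s in scores.values() if s >= HUMAN_LEVEL]
    return mean(strong) if strong else 0.0


calculator = {"arithmetic": 1000.0, "make_sandwich": 0.0, "catch_ball": 0.0, "tell_joke": 0.0}
generalist = {"arithmetic": 1.0, "make_sandwich": 1.1, "catch_ball": 1.0, "tell_joke": 1.2}
specialist = {"arithmetic": 50.0, "make_sandwich": 0.2, "catch_ball": 0.1, "tell_joke": 40.0}

for name, scores in [("calculator", calculator), ("generalist", generalist), ("specialist", specialist)]:
    print(f"{name}: breadth={breadth(scores):.2f}, depth={depth(scores):.2f}")
```

In this framing the calculator has enormous depth on a single task and almost no breadth, the generalist covers every task at roughly human level, and the specialist sits in between: far beyond human on a few tasks, below human on the rest.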

Why do these monikers matter?

This distinction is crucial for AI research initiatives. Superintelligence can be pursued using known techniques: RL-based reasoning and carefully crafted, highly accurate reward functions in various domains such as coding, math, and science. AGI, in the sense of something close to human intelligence, may require more scientific innovation.

Interestingly, many AI research labs are attempting to build task-by-task intelligence (aka Agents) and stitch the pieces together to give the perception of generalization, under the veil of pursuing AGI. While these systems will fail to generalize to human level (remember “The Bitter Lesson” by Rich Sutton), they’ll be economically valuable enough that investors won’t care.